Perspective-aware Convolution

Monocular 3D Object Detection

Demo Video

Motivation

It’s crucial for a self-driving car to precisely estimate the 3D positions of nearby cars and pedestrians. Although active range sensors such as LiDAR can measure accurate distances to objects, they are typically expensive and difficult to install. We therefore want to use the most prevalent sensor, a camera, to detect 3D objects in autonomous driving scenes.

Introduction - Monocular 3D Object Detection

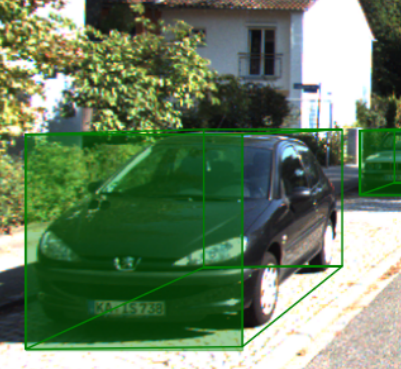

Monocular 3D object detection uses a single image to detect 3D objects, each represented by a cuboid. A cuboid is defined by nine variables: location (x, y, z), dimensions (w, h, l), and orientation (roll, pitch, yaw). Since most objects on the road are parallel to the ground, roll and pitch can be ignored, which reduces the number of variables to regress to seven: x, y, z, w, h, l, and yaw.
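To make the seven-parameter representation concrete, the NumPy sketch below turns (x, y, z, w, h, l, yaw) into the eight corners of the cuboid. It assumes (x, y, z) is the box center in camera coordinates, that yaw rotates the box about the vertical y-axis, and that roll and pitch are zero. The function name `box_corners` is illustrative and not taken from any codebase.

```python
import numpy as np

def box_corners(x, y, z, w, h, l, yaw):
    """Return the 8 corners (8x3 array) of a 3D box given by 7 parameters.

    Assumptions (illustrative only): (x, y, z) is the box center in camera
    coordinates; l lies along the object's heading, w across it, h vertical;
    yaw rotates about the vertical (y) axis; roll = pitch = 0.
    Some datasets (e.g. KITTI labels) store the bottom-face center instead,
    so y would need to be adjusted by h / 2 before calling this function.
    """
    # Half-extents along the box's own axes
    half = np.array([l / 2.0, h / 2.0, w / 2.0])
    # Every sign combination gives one corner of the axis-aligned box
    signs = np.array([[sx, sy, sz]
                      for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)],
                     dtype=float)
    local = signs * half
    # Rotation about the y-axis by yaw
    c, s = np.cos(yaw), np.sin(yaw)
    rot_y = np.array([[c, 0.0, s],
                      [0.0, 1.0, 0.0],
                      [-s, 0.0, c]])
    # Rotate, then translate to the box center
    return local @ rot_y.T + np.array([x, y, z])
```

For example, `box_corners(1.0, 1.5, 20.0, 1.6, 1.5, 3.9, 0.3)` returns an 8×3 array that can be projected into the image with the camera intrinsics.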

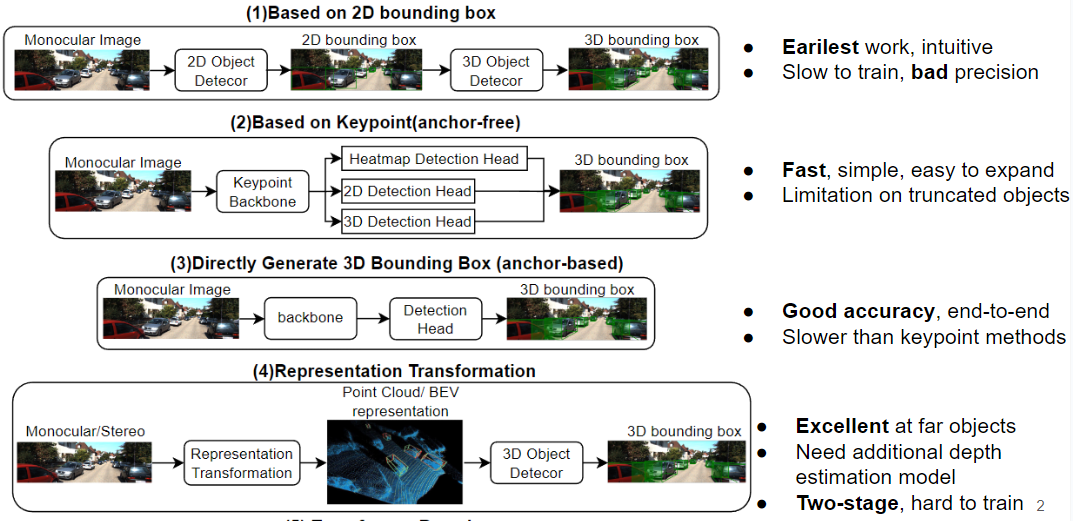

Currently, most existing work falls into the following categories:

- Based on 2D boxes: The most straightforward method crops each object from the input image as a region of interest (RoI) and then regresses the 3D box geometry from the features inside the RoI. This approach is sensitive to the accuracy of the detected 2D bounding box, which often leads to poor accuracy, and it appears mainly in early research.

- Key-point-based (anchor-free): These methods identify key points on the image that are crucial for the 3D bounding box, usually the object center or the corners of its 3D box, and construct the 3D box from them. They are fast and simple, which makes them easy to scale. However, their heavy reliance on key-point detection lowers accuracy, particularly for truncated objects whose key points are not visible in the image.

- Direct generation of 3D boxes (anchor-based): This approach treats the problem like 2D bounding-box regression but extends the detection head to predict the additional variables. It offers good accuracy and usually uses an end-to-end architecture.

- Representation transformation: Some researchers argue that detecting 3D objects directly in 2D images is suboptimal. They first convert the image into a 3D representation, such as a point cloud or a bird’s-eye-view (BEV) plane, and then apply existing 3D object detection methods to it. This transform-then-detect pipeline achieves high accuracy, especially for distant objects, but its cumbersome architecture makes it hard to train.
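Both key-point lifting and representation transformation rest on the pinhole camera model: a pixel (u, v) with depth Z maps to X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The NumPy sketch below applies this back-projection to a single key point and to a whole depth map (a pseudo point cloud). It assumes known intrinsics K and metric depth; the function names are hypothetical, and it is not the implementation of any particular method in the list.

```python
import numpy as np

def backproject(u, v, depth, K):
    """Lift pixel(s) (u, v) with metric depth into 3D camera coordinates.

    Inverts the pinhole projection u = fx * X / Z + cx, v = fy * Y / Z + cy.
    Accepts scalars or equally shaped arrays (e.g. a full depth map).
    """
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    Z = np.asarray(depth, dtype=float)
    X = (np.asarray(u, dtype=float) - cx) * Z / fx
    Y = (np.asarray(v, dtype=float) - cy) * Z / fy
    return np.stack([X, Y, Z], axis=-1)

def depth_map_to_points(depth_map, K):
    """Convert an HxW depth map into an (H*W)x3 pseudo point cloud."""
    h, w = depth_map.shape
    vs, us = np.mgrid[0:h, 0:w]  # row (v) and column (u) index grids
    return backproject(us, vs, depth_map, K).reshape(-1, 3)
```

A key-point method would call `backproject` once per detected object center with its predicted depth, while a representation-transformation method would feed the output of `depth_map_to_points` to a point-cloud detector.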

Approach

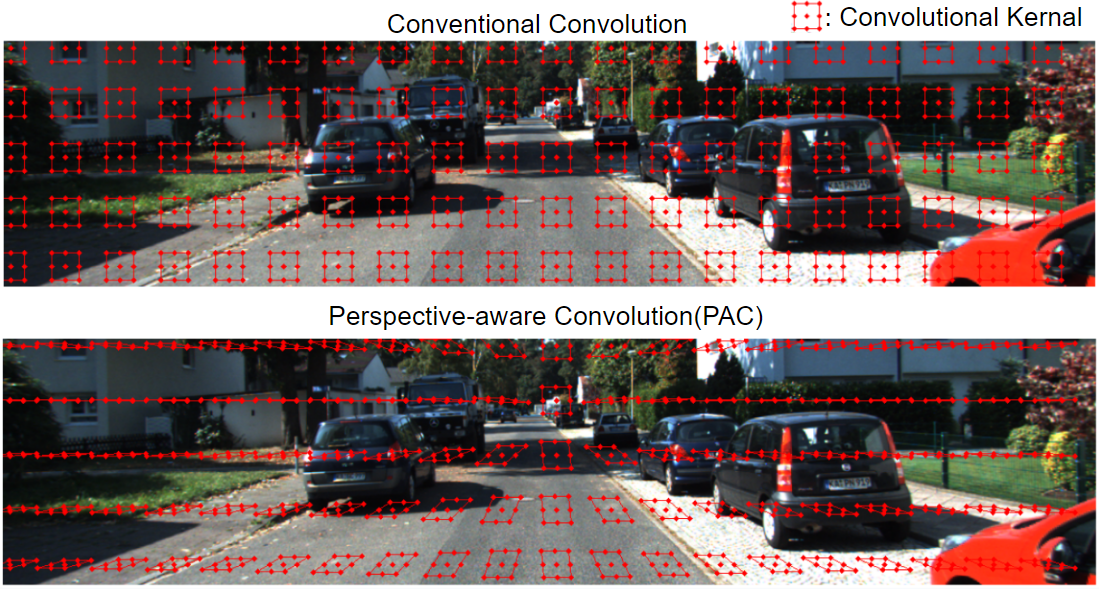

To improve 3D object detectors, our idea is to give the network the ability to recognize depth-related patterns in images, such as straight lines on the pavement and landmarks. We believe that lines parallel to the depth axis can serve as references when predicting object depth.
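Geometrically, lines parallel to the camera’s depth (z) axis converge at a single vanishing point in the image, namely the principal point (cx, cy); lane markings run parallel to that axis when the camera faces straight down the road. The sketch below computes, for every pixel, the slope dx/dy of the image line through that pixel and an assumed vanishing point (vx, vy). Representing the depth-axis direction this way, and the name `depth_axis_slope`, are illustrative assumptions.

```python
import numpy as np

def depth_axis_slope(height, width, vanishing_point):
    """Per-pixel slope dx/dy of the line through each pixel and the vanishing point.

    vanishing_point = (vx, vy) in pixel coordinates (an assumed prior).
    Moving dy rows along the line shifts it by slope * dy columns.
    Pixels on the horizon row (y == vy) get slope 0 to avoid division by zero.
    """
    vx, vy = vanishing_point
    ys, xs = np.mgrid[0:height, 0:width].astype(float)
    dy = vy - ys
    dx = vx - xs
    safe_dy = np.where(dy == 0, 1.0, dy)  # placeholder denominator on the horizon
    return np.where(dy == 0, 0.0, dx / safe_dy)
```

Pixels in the same column as the vanishing point get slope 0 (a vertical line), and the slope grows in magnitude toward the left and right image borders, matching how lane lines fan out.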

To achieve this, we propose a novel convolutional layer that skews the convolution kernel to align with the slope of the depth axis instead of keeping its regular square shape. In our experiments, we assume prior knowledge of the scene’s perspective, which lets us skew the kernel accordingly. We call this method Perspective-Aware Convolution (PAC) and integrate it into existing 3D object detectors, so that we can assess its effectiveness by comparing 3D bounding-box detection performance with and without PAC.
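The following is a minimal single-channel NumPy sketch of the skewing idea, not the authors’ PAC implementation. It assumes the perspective prior is a single vanishing point (vx, vy): each kernel row is shifted horizontally by the depth-axis slope at the output pixel, so the kernel’s vertical axis follows the line toward the vanishing point while the center row stays horizontal. Sampling is nearest-neighbour with edge clamping; a trainable layer would more plausibly use bilinear sampling over multi-channel features. The function name and its parameters are hypothetical.

```python
import numpy as np

def perspective_skewed_conv(image, kernel, vanishing_point, dilation=1):
    """Skewed 2D cross-correlation sketch on a single-channel image.

    Assumptions: odd square kernel; perspective given as a vanishing point
    (vx, vy); nearest-neighbour sampling; out-of-range samples are clamped
    to the image border.
    """
    h, w = image.shape
    r = kernel.shape[0] // 2
    vx, vy = vanishing_point
    ys, xs = np.mgrid[0:h, 0:w]
    dy_vp = vy - ys
    # Columns shifted per row along the depth axis (0 on the horizon row)
    slope = np.where(dy_vp == 0, 0.0, (vx - xs) / np.where(dy_vp == 0, 1, dy_vp))
    out = np.zeros((h, w), dtype=float)
    for i in range(-r, r + 1):          # kernel row offset (along the depth axis)
        for j in range(-r, r + 1):      # kernel column offset (horizontal)
            dy = i * dilation
            # Skew: rows above/below the center follow the depth-axis direction
            dx = j * dilation + slope * dy
            sy = np.clip(ys + dy, 0, h - 1)
            sx = np.clip(np.rint(xs + dx).astype(int), 0, w - 1)
            out += kernel[i + r, j + r] * image[sy, sx]
    return out
```

At a pixel in the same column as the vanishing point the slope is zero, so the output there matches an ordinary convolution; elsewhere the kernel leans toward the vanishing point, and the lean grows with the dilation rate.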

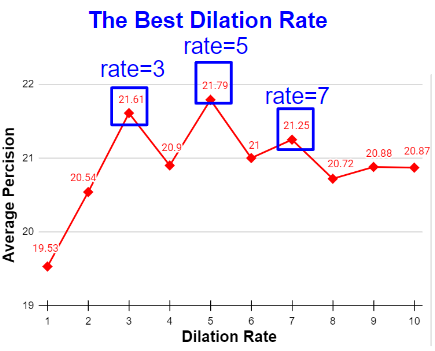

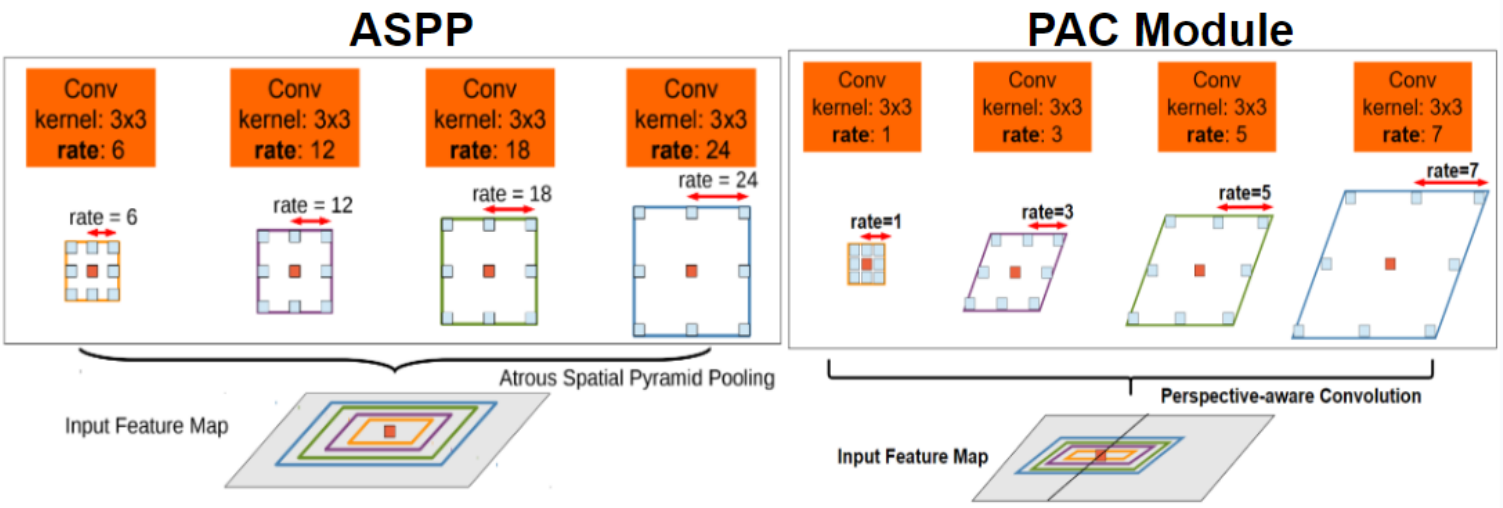

Apart from the kernel shape, we also want to find the best effective kernel size for our network. To this end, we experimented with various dilation rates and evaluated their performance in 3D object detection. Our findings indicate that dilation rates from three to seven generally yield the best results.
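Dilation enlarges a kernel’s footprint without adding weights: a k×k kernel with dilation d spans k + (k - 1)(d - 1) pixels along each axis. Assuming 3×3 kernels (the base kernel size is not stated above), the short sketch below translates dilation rates into footprints on the feature map.

```python
def effective_kernel_size(k, dilation):
    """Pixels spanned along one axis by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)

for d in range(1, 8):
    print(f"dilation {d}: a 3x3 kernel spans {effective_kernel_size(3, d)} pixels")
```

Under that assumption, dilation rates of 3 to 7 correspond to footprints of 7 to 15 feature-map pixels.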

Building on these findings, we designed a Perspective-Aware Convolution module that closely resembles the Atrous Spatial Pyramid Pooling (ASPP) module. It applies kernels with several dilation rates in parallel, and each kernel’s shape is skewed to align with the slope of the depth axis, as depicted in the illustration below.
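A hypothetical ASPP-style arrangement is sketched below in NumPy: skewed sampling is applied in parallel branches at several dilation rates, and the stacked branch outputs are fused by a 1×1 convolution, written as a matrix product over the branch axis, as in ASPP. The dilation rates (3, 5, 7), the single-channel input, the nearest-neighbour sampling, and the absence of normalization and activations are simplifying assumptions, not details of the actual PAC module.

```python
import numpy as np

def skewed_conv(image, kernel, vanishing_point, dilation):
    """One branch: nearest-neighbour conv whose rows follow the depth-axis slope."""
    h, w = image.shape
    r = kernel.shape[0] // 2
    vx, vy = vanishing_point
    ys, xs = np.mgrid[0:h, 0:w]
    dy_vp = vy - ys
    slope = np.where(dy_vp == 0, 0.0, (vx - xs) / np.where(dy_vp == 0, 1, dy_vp))
    out = np.zeros((h, w))
    for i in range(-r, r + 1):
        for j in range(-r, r + 1):
            dy = i * dilation
            sy = np.clip(ys + dy, 0, h - 1)
            sx = np.clip(np.rint(xs + j * dilation + slope * dy).astype(int), 0, w - 1)
            out += kernel[i + r, j + r] * image[sy, sx]
    return out

def pac_module(feature, kernels, fuse_weights, vanishing_point, dilations=(3, 5, 7)):
    """ASPP-style block: parallel skewed branches fused by a 1x1 convolution.

    feature: (H, W) single-channel map; kernels: one k x k kernel per dilation;
    fuse_weights: (C_out, num_branches) matrix acting as the 1x1 convolution.
    """
    branches = np.stack([skewed_conv(feature, k, vanishing_point, d)
                         for k, d in zip(kernels, dilations)])  # (B, H, W)
    return np.einsum("ob,bhw->ohw", fuse_weights, branches)     # (C_out, H, W)
```

Fusing with a 1×1 convolution lets the network weight near-range and far-range context per output channel instead of fixing one dilation rate.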

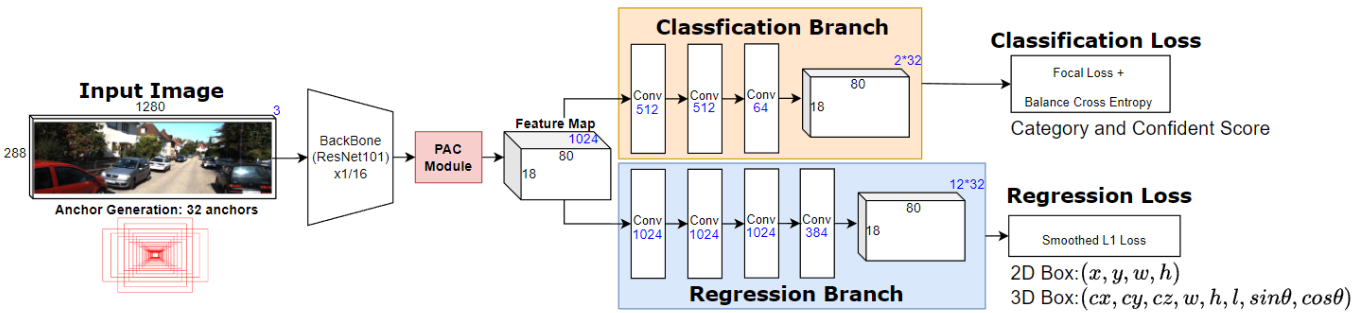

We placed our Perspective-Aware Convolution (PAC) module directly after the backbone’s feature extraction stage, which injects depth-aware information into the extracted features. The enhanced features are then fed into the detection head, so that every branch of the head benefits from them.
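The placement described above can be summarised as a forward pass. In the sketch below, `backbone`, `pac`, and the head branches are hypothetical stand-ins (an average-pooling layer, an identity placeholder, and simple reductions); only the data flow is meant to be illustrative: PAC runs once after the backbone, and every head branch consumes the same enhanced features.

```python
import numpy as np

def backbone(image):
    """Stand-in backbone: 4x average-pool downsampling (hypothetical)."""
    h, w = image.shape[0] // 4 * 4, image.shape[1] // 4 * 4
    return image[:h, :w].reshape(h // 4, 4, w // 4, 4).mean(axis=(1, 3))

def pac(features):
    """Placeholder for the PAC module; identity here, for illustration only."""
    return features

# Hypothetical head branches; real detectors predict dense maps, not scalars.
HEADS = {
    "classification": lambda f: float(f.mean()),
    "box_2d": lambda f: float(f.max()),
    "box_3d": lambda f: float(f.min()),
}

def detector_forward(image):
    features = backbone(image)   # 1. feature extraction
    enhanced = pac(features)     # 2. PAC applied once, right after the backbone
    # 3. every head branch reads the same PAC-enhanced features
    return {name: head(enhanced) for name, head in HEADS.items()}

outputs = detector_forward(np.random.rand(288, 1280))
print(sorted(outputs))
```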

Experiment

In this section, we present the performance of our PAC module in monocular 3D object detection. We used the Ground-aware network as the baseline and integrated PAC into it. Training and testing were conducted on the KITTI3D dataset, with 3,711 training images and 3,768 validation images.

For training, we used a batch size of 8 and the Adam optimizer with a learning rate of 0.0001. To improve efficiency, we cropped the top 100 pixels of each image and resized it to 288x1280. We also applied horizontal flipping and photometric distortion to increase the diversity of the training data.
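Cropping and resizing change the pixel grid, so a monocular 3D pipeline generally has to update the camera intrinsics to keep 3D-to-2D projections consistent. This step is not described above; the sketch below shows the standard pinhole update, assuming K is a 3×3 intrinsic matrix, that the top `crop_top` rows are removed before resizing, and that half-pixel offset conventions can be ignored. The function name is hypothetical.

```python
import numpy as np

def crop_resize_intrinsics(K, crop_top, src_hw, dst_hw):
    """Update 3x3 intrinsics after cropping `crop_top` rows from the top of an
    image of size src_hw = (H, W) and resizing the crop to dst_hw = (H', W')."""
    src_h, src_w = src_hw
    dst_h, dst_w = dst_hw
    sy = dst_h / (src_h - crop_top)  # vertical scale factor
    sx = dst_w / src_w               # horizontal scale factor
    K_new = K.astype(float).copy()
    K_new[1, 2] -= crop_top          # removing top rows shifts the principal point up
    K_new[0, :] *= sx                # scale fx, skew and cx
    K_new[1, :] *= sy                # scale fy and cy
    return K_new
```

For example, an image of 375×1242 pixels cropped by 100 rows and resized to 288×1280 is scaled by 288/275 vertically and 1280/1242 horizontally. Horizontal flipping additionally requires mirroring the 3D labels, which is not shown here.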

Result

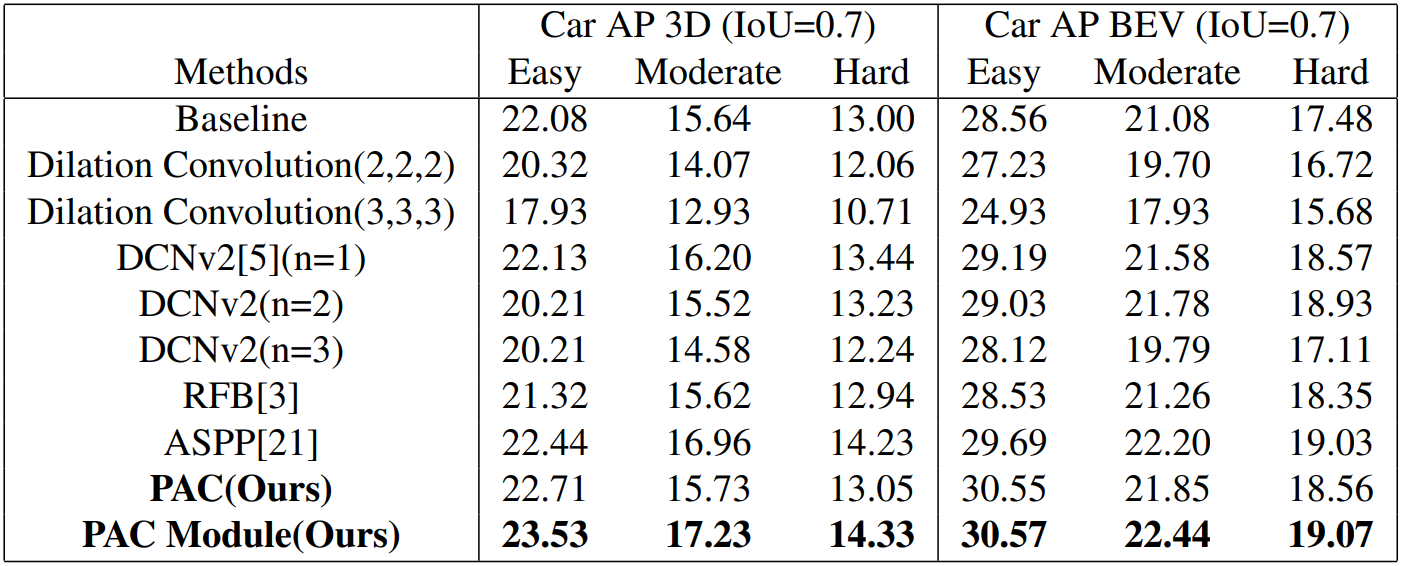

In our experiments, we compared our Perspective-Aware Convolution (PAC) module against other enhanced convolution layers. Adding PAC improved performance more than the other convolution enhancements we tested.

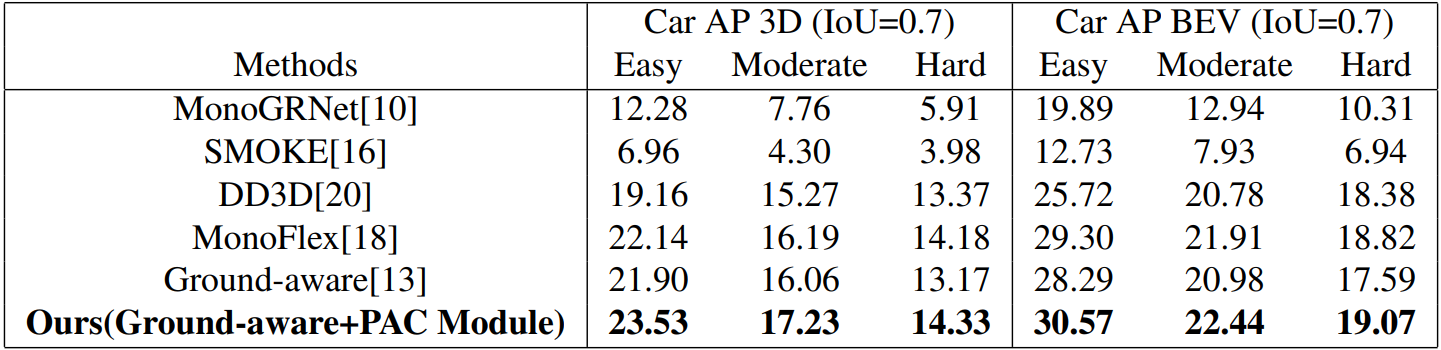

We also integrated the PAC module into three different 3D detectors. The results were promising: with PAC, these detectors outperformed many existing models in the field.

Conclusion

Our research demonstrates the importance of incorporating depth-related information in monocular 3D object detection. By effectively leveraging perspective information, we can significantly enhance a model’s ability to interpret 3D scenes.